One day I had an interesting chat with a colleague. At work, we have Windows and Mac computers supported by our IT department. You can also run Linux, but then you’re mostly on your own: if you run into problems, you need to figure them out yourself.

While that is fine for most Linux users, it also keeps other people away from Linux. I, for one, am too old to spend my time tweaking and debugging my work laptop. I’d rather just do my work.

It wasn’t always like that, though. Getting old, but also getting wiser 😉

Well, anyway. We were discussing how to run Linux on our laptops. We knew we could uninstall Windows or set up a multiboot with Linux, but downloading the image and running the installation would take over an hour to get Ubuntu installed, even on our extremely fast office network. So we didn’t want to. We like to do things. Not wait for things.

Why not spin up a virtual machine in Google Cloud with a pre-installed Ubuntu image? You can attach a GPU if you want, install all kinds of bells and whistles, and use X forwarding or VNC to connect to your VM.
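For the curious, a VM like this can be created with the Google Cloud SDK’s `gcloud compute instances create` command. The Python sketch below only assembles that command line. The instance name, zone, machine type and GPU type are placeholder assumptions, not the exact setup we used, and GPU availability varies by zone, so check before running it.

```python
import shlex


def create_vm_command(name, zone="us-central1-a", machine_type="n1-standard-4",
                      gpu_type=None, gpu_count=1):
    """Build the gcloud argv for an Ubuntu VM, optionally with a GPU attached.

    The zone, machine type and GPU type defaults are illustrative placeholders.
    """
    cmd = [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        # Public Ubuntu images are published in the ubuntu-os-cloud project.
        "--image-family=ubuntu-2004-lts",
        "--image-project=ubuntu-os-cloud",
    ]
    if gpu_type:
        cmd.append(f"--accelerator=type={gpu_type},count={gpu_count}")
        # GPU VMs cannot live-migrate, so host maintenance must terminate them.
        cmd.append("--maintenance-policy=TERMINATE")
    return cmd


if __name__ == "__main__":
    # Print the shell command instead of running it.
    print(shlex.join(create_vm_command("linux-box", gpu_type="nvidia-tesla-t4")))
```

To actually create the VM, pass the list to `subprocess.run(cmd, check=True)` on a machine with the Cloud SDK installed and authenticated. Stopping and starting it later works the same way with `gcloud compute instances stop` and `gcloud compute instances start`.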

That’s what we did. And voilà, in under 10 minutes we had Linux running full screen on our Windows laptops. And not just on one laptop: we could have multiple users on that Linux box, all using it at the same time. We felt like we had invented something.

Welcome to the 1980s

The idea of thin clients running their workloads in the “cloud”, or on a mainframe if you will, is not new. But it feels like it’s making a comeback.

A large majority of new projects, solutions and systems run in the cloud. Fewer and fewer people want to manage their own server farms. I don’t even want to manage the servers running this WordPress site. That’s why I’m using Opalstack, a perfect alternative to Webfaction. (Wasn’t that a smooth trick? 🙂)

But companies still largely rely on physical, probably leased, computers. Developers need more and more power but use it only occasionally. Compiling is faster when you have enough power, but most of the time that power just sits idle. The same goes for almost every so-called power user. Yes, they need power. But not all the time.

Compared with cloud VM instances (like the Linux box I mentioned earlier), that certainly feels wasteful. In 2020, would you boot up a VM sized for your peak load and be happy running it 24/7? Or would you rather build in some scalability and optimize the number of VMs over time (if you end up using VMs at all)? Money might not even be the issue. It just feels wrong.
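To put a rough number on “wasteful”: a VM left running 24/7 is billed for every hour of the month, while a work machine is only really used during working hours. This sketch assumes a 30-day month, 22 working days and 8-hour workdays (illustrative assumptions, not measurements) and ignores costs such as disk storage, which Google Cloud keeps charging for even while a VM is stopped.

```python
def monthly_hours(days: int, hours_per_day: int) -> int:
    """Hours a machine is running during one month."""
    return days * hours_per_day


# Illustrative assumptions: 30-day month, 22 working days, 8-hour workdays.
always_on = monthly_hours(30, 24)     # VM left running 24/7
working_hours = monthly_hours(22, 8)  # VM running only while we work

utilization = working_hours / always_on
print(f"always on: {always_on} h, working hours only: {working_hours} h")
print(f"share of billed hours actually used: {utilization:.0%}")
```

Under these assumptions, only about a quarter of the paid-for hours would be used and roughly three quarters would be idle, which is exactly the part that feels wrong.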

The same goes for our Linux box in Google Cloud. It feels really wrong to keep it running 24/7. It’s so cloud un-native. But stopping it manually feels sad too, because then it won’t be available instantly when I need it! I don’t want to wait for things. I want to do things.

Containers and Sandboxes

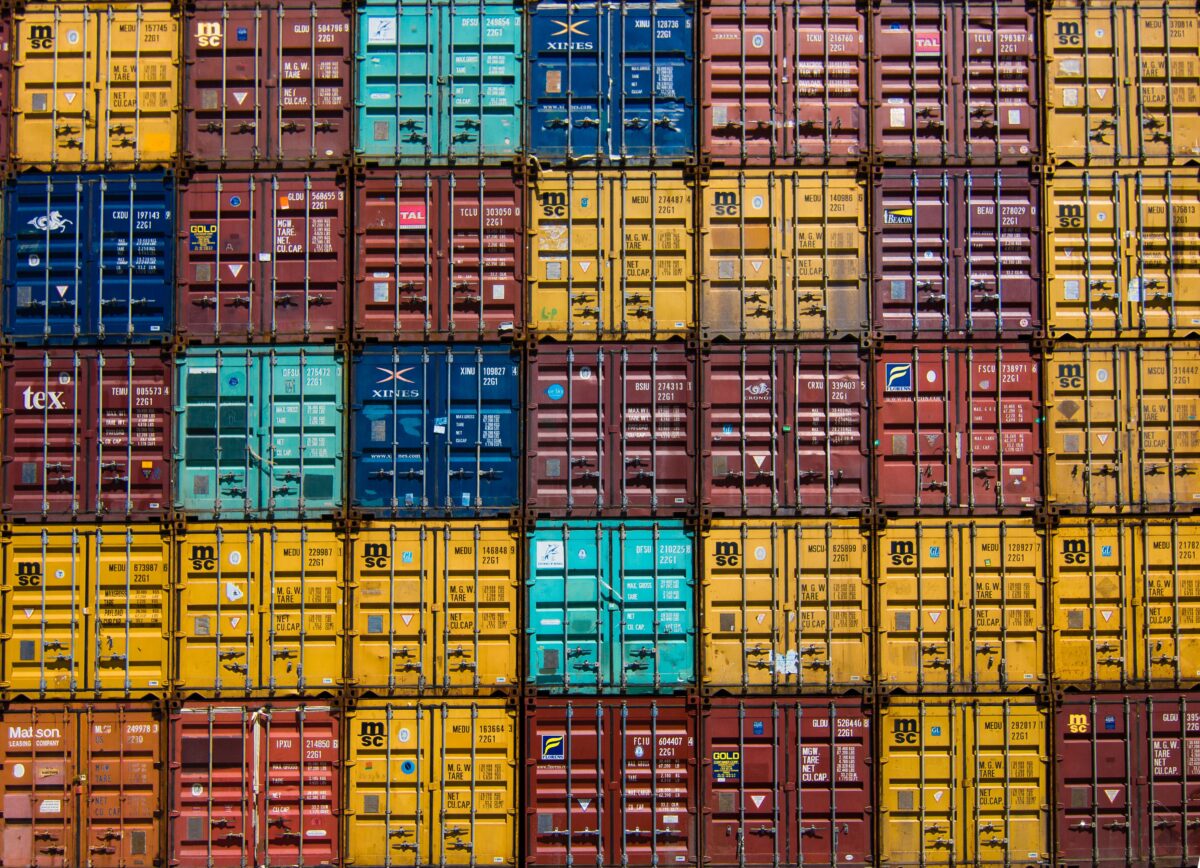

I’ll admit that I know nothing about containers, except that they can be shipped around and are sometimes found floating in the sea after harsh weather. Still, I’m going to use the word container multiple times. Sorry.

Look at Chrome OS. It advertises itself as an OS that runs applications in sandboxes. If one application gets hit by a virus or something, the infection cannot spread beyond the sandbox fences. Great.

So every application more or less runs on its own. Somehow (by magic, I assume) they get services from the OS. Other than that, they’re completely isolated from the OS and from other apps. That concept is not far from having this kind of OS run in the cloud on some cheap-ass VM (or in some PaaS awesomeness), with every app you launch running in its own container in the cloud. And when I use the word container, don’t think only of Docker. Be open-minded.

Think about it. Your thin client would just need an excellent user interface, a good battery and good connectivity. Your work “computer” would be this launcherOS in the cloud, and each application would start in its own container with its own CPU, memory, GPU and other settings. Maybe the software itself could even describe what kind of resources it wants, and how much.
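Here is one way “the software describes what it wants” could look. This is a purely hypothetical sketch: `ResourceRequest`, the size catalogue and `pick_size` are invented for illustration and don’t correspond to any existing launcher or cloud API. Each app declares its needs, and the launcher picks the cheapest container size that covers all of them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceRequest:
    """What an app declares it needs (hypothetical manifest format)."""
    vcpus: int
    memory_gb: float
    gpus: int = 0


@dataclass(frozen=True)
class MachineSize:
    name: str
    vcpus: int
    memory_gb: float
    gpus: int


# Hypothetical container sizes, assumed to be ordered from cheapest to priciest.
CATALOGUE = [
    MachineSize("tiny", 1, 2, 0),
    MachineSize("standard", 4, 16, 0),
    MachineSize("large", 16, 64, 0),
    MachineSize("gpu", 8, 32, 1),
]


def pick_size(req: ResourceRequest) -> MachineSize:
    """Return the first (cheapest) size that satisfies every part of the request."""
    for size in CATALOGUE:
        if (size.vcpus >= req.vcpus
                and size.memory_gb >= req.memory_gb
                and size.gpus >= req.gpus):
            return size
    raise ValueError(f"no container size can satisfy {req}")


# Each app ships its own request; the launcher starts it in a matching container.
APPS = {
    "text-editor": ResourceRequest(vcpus=1, memory_gb=1),
    "compiler": ResourceRequest(vcpus=16, memory_gb=32),
    "ml-notebook": ResourceRequest(vcpus=4, memory_gb=16, gpus=1),
}

for app, req in APPS.items():
    print(app, "->", pick_size(req).name)
```

The text editor lands in the cheapest container, while only the compiler and the notebook ever touch the expensive ones, and only while they are running.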

You could run your simple apps on very cheap resources, and whenever you needed extra power, it would be immediately available. That comes at a price, of course, but so do these physical powerhouses. It also comes with flexibility. There’s no reason to upgrade your computer because you need more power. You already have it. Just use it. Who wants to spend days setting up a new computer when you could avoid it completely? Maybe Chromebooks are the future...

Why isn’t this happening?

Once again I feel like I’ve invented something new. Well, I know I haven’t. But at the same time I wonder: why isn’t this kind of approach getting more traction? I understand that operating systems are not there yet, but we have fewer and fewer reasons to buy expensive machines with lots of memory and CPU when all we really need is something that can run a full-screen remote desktop.

But what if you need offline access? Well... how many of you developers can work without an internet connection? How many of you can live without access to CI/CD pipelines, GitHub, JIRA or Stack Overflow?

And if you’re a guy like me whose job is to produce PowerPoint and Excel content, those apps can also run locally, at least for simple files. But even Excel sheets are increasingly online nowadays, connected to data sources or whatever.

Requiring a working internet connection may keep us from trusting this kind of solution. But what else is stopping us? Wouldn’t it be reasonable to start by running desktop OSes in the cloud and, some day, make them more and more cloud native? It would feel like the future. Hopefully it happens soon.

Man, I need to buy one of those Chromebooks.